ChatGPT named least reliable work chatbot in new AI reliability report

ChatGPT may dominate the AI chatbot market, but a new report suggests popularity does not equal trustworthiness. A December 2025 study examining how leading AI chatbots perform in everyday work scenarios has ranked ChatGPT as the least reliable option for professional tasks. The findings raise fresh concerns for businesses that increasingly depend on AI tools for daily operations.

The study, conducted by Relum, didn’t just look at specs on paper; they stress-tested ten major AI chatbots in real-world professional scenarios. The results? A massive disconnect between hype and reality.

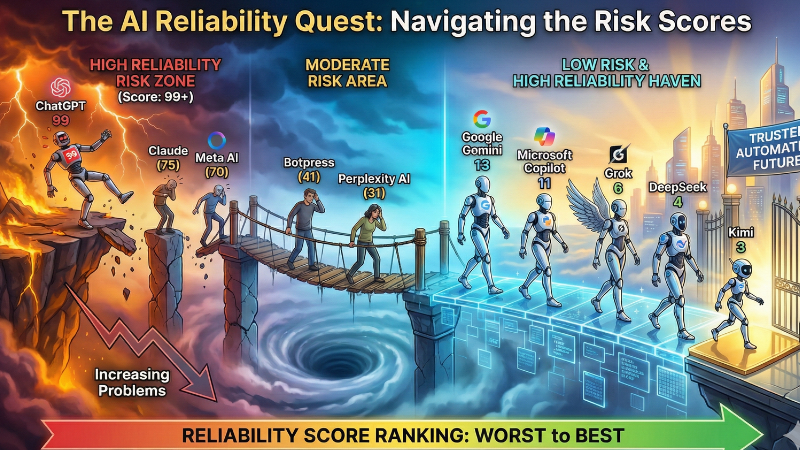

The study assessed each chatbot across four key criteria. These were hallucination rate, customer product ratings, response consistency across tasks, and downtime frequency. Each factor contributed to a composite reliability risk score, with higher scores indicating greater potential workplace issues.

Here is the stat that should keep business leaders up at night: Despite controlling a massive 81% of the market and boasting high user ratings, ChatGPT recorded a hallucination rate of 35%.

In plain English, that means more than one out of every three answers it gives contains fabricated or incorrect information. If you are using it to draft a fantasy novel, that’s fine, but if you are using it for compliance reports or financial decision-making, that is a recipe for disaster. Consequently, the study slapped ChatGPT with a reliability risk score of 99 out of 99, the worst in the group.

Google didn’t fare any better. While Gemini had better uptime, it actually performed worse on pure accuracy, registering the highest hallucination rate of the entire group at 38%. It highlights a weird paradox in the current AI market: the tools we use the most are often the ones struggling the hardest to keep their facts straight.

Claude and Meta AI occupy a murky middle ground. Claude, despite being a favourite for its writing style, ranked as the second least reliable due to frequent downtime and a 17% hallucination rate. Meta AI was more accurate (15% hallucination), but users seem not to like the experience, giving it the lowest satisfaction rating of the bunch (3.4 out of 5).

The “underdogs” – Grok and DeepSeek steal the show from ChatGPT

If the big names are dropping the ball, who is actually doing the work? Surprisingly, the study points to Grok and DeepSeek as the most reliable tools for professional use. They don’t have the massive marketing budgets or brand recognition of OpenAI, but they simply worked better. DeepSeek recorded zero service outages and kept hallucinations to a minimum.

Kimi also scored well, finding a sweet spot between consistency and uptime. Meanwhile, paid options like Perplexity AI were solid but raised questions about whether the subscription cost is worth it when cheaper, lesser-known alternatives are outperforming them.

Relum’s Chief Product Officer, Razvan-Lucian Haiduc, warned that reliability should be a central factor in AI adoption decisions. He noted that around 65% of US companies now use AI chatbots in daily workflows. Nearly 45% of employees admit to sharing sensitive company information with these tools.

As AI becomes more embedded in routine work, the risks of misinformation multiply. Haiduc emphasised that the most widely used chatbot is not always the best fit for every industry. Accuracy, uptime and task-specific performance should outweigh brand familiarity.

The report serves as a reality check for the industry. Trust shouldn’t be given just because a chatbot is famous; it should be earned through consistent, verifiable truth. Right now, it looks like the market leaders have some serious catching up to do.

Leave a Reply